I am very pleased to announce that after months of voluntary work here and there in the evenings, I finally completed and presented both my demos of M3 picking lists in Google Glass and Augmented Reality at Inforum. They were a success. I showed the demos flawlessly to about 100 people per day over six days, and they were very well received. The goal was to show proofs of concept of wearable computers and augmented reality applied to Infor M3. My feet hurt.

Features

This is my second Glass app after the one for Khan Academy.

This Glass app has the following features:

- It displays a picking list from Infor M3 as soon as it’s created in M3.

- For each pick list line it shows the quantity (ALQT), item number (ITNO), item description (ITDS), and stock location (WHSL) as aisle/rack/level.

- It displays the pick list lines as a bundle for easy grouping and finding.

- It shows walking directions in the warehouse.

- It has a custom menu action for the picker to mark an item as picked and to change the status of that pick list line in M3.

- It uses the built-in text-to-speech capability of Glass to illustrate hands-free picking.

- It’s bi-directional: from M3 to Google’s servers to push the picking list to Glass, and from Google’s servers to M3 when the picker confirms a line.

- The images come from Infor Document Management (formerly Document Archive).

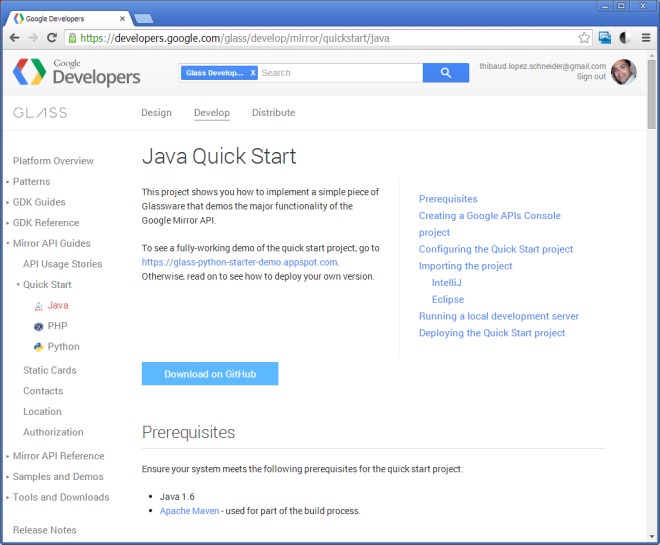

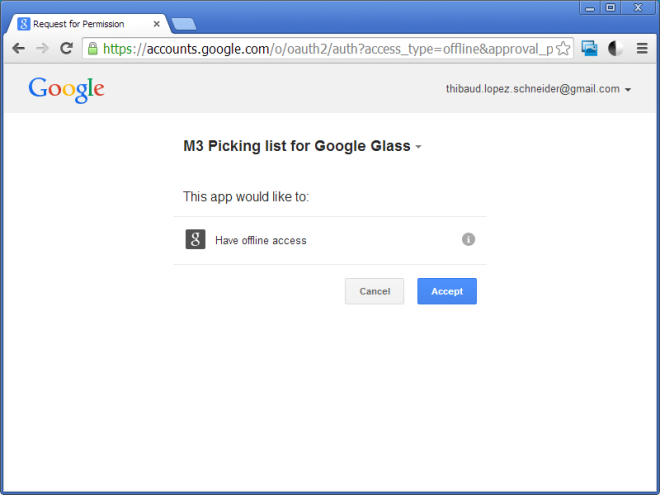

- I developed the app in Java as an Infor Grid application.

- I created a custom subscriber and added a subscription to Event Analytics to M3:MHPICL:U.

- It uses the Google Mirror API for simplicity to illustrate the proof-of-concept.
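To give an idea of what one pick list line looks like on its way to Glass, here is a minimal sketch of the JSON body of a Mirror API timeline item. It is not the actual code of the app. The field names (`bundleId`, `text`, `speakableText`, `menuItems`, `notification`) are those of the Mirror API timeline resource; the class name `PickLineCard`, the method names, the menu item id `confirm`, the card text layout, and the sample values are all assumptions made up for illustration. The JSON is built by hand to keep the example free of dependencies; a real application would more likely use the Google client library.

```java
public class PickLineCard {

    /** Escapes the characters that must be escaped inside a JSON string. */
    static String esc(String s) {
        StringBuilder sb = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"':  sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n");  break;
                default:
                    if (c < 0x20) sb.append(String.format("\\u%04x", (int) c));
                    else sb.append(c);
            }
        }
        return sb.toString();
    }

    /**
     * Builds the JSON of one timeline item for one pick list line.
     * bundleId groups all the lines of a picking list together on Glass.
     * alqt, itno, itds, whsl are the M3 fields named in the feature list;
     * the text layout below is a hypothetical choice.
     */
    static String toTimelineItemJson(String bundleId, String alqt, String itno,
                                     String itds, String whsl) {
        String text = alqt + " x " + itno + " " + itds + " @ " + whsl;
        String speakable = "Pick " + alqt + " of " + itds + " at location " + whsl;
        return "{"
            + "\"bundleId\":\"" + esc(bundleId) + "\","
            + "\"text\":\"" + esc(text) + "\","
            // Text used by the Read aloud menu item.
            + "\"speakableText\":\"" + esc(speakable) + "\","
            + "\"menuItems\":["
            // Custom action; "confirm" is a made-up id for this sketch.
            + "{\"action\":\"CUSTOM\",\"id\":\"confirm\","
            + "\"values\":[{\"displayName\":\"Confirm\"}]},"
            // Built-in text-to-speech action.
            + "{\"action\":\"READ_ALOUD\"}"
            + "],"
            + "\"notification\":{\"level\":\"DEFAULT\"}"
            + "}";
    }

    public static void main(String[] args) {
        // Sample values are invented, not real M3 data.
        System.out.println(
            toTimelineItemJson("picklist-0001", "5", "ITEM001", "Widget", "A-12-3"));
    }
}
```

The resulting JSON would be sent to the Mirror API to insert the card in the timeline. When the picker taps Confirm, Google's servers notify the subscribed callback with the id of the custom menu item, which is where the update of the pick list line in M3 would be triggered.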

I have been publishing the resulting source code as free and open source software in my GitHub repository, and I have been writing up the details on this blog. I will soon post the remaining details.

Acknowledgements

I especially want to thank Peter A Johansson of Infor GDE Demo Services for always believing in my idea, his manager Robert MacCrate for providing the servers on Infor CloudSuite, Philip Cancino formerly of Infor for helping with the functional understanding of picking lists in M3, Marie-Pascale Authié of Infor Pre-Sales for helping me set up and create picking lists in M3 and for also doing the demo at Inforum, Zack Makris of Infor Labs for providing technical support, Jonathan Amiran of Intentia Israel for helping me write the Grid application, and some people of Infor Product Development who chose to remain anonymous for helping me write a Java application for Event Hub and Document Archive. I also especially want to thank all the participants of Inforum who saw the demo and provided feedback, and all of you readers for supporting me. I probably missed some important contributors; thank you too. And thanks to Google X (especially Sergey Brin and Thad Starner) for believing in wearable computers and for accelerating the eyewear market.

Screenshots

Below are the screenshots from androidcast. They show the bundle cover, the three pick list lines with the items to pick, the Confirm custom menu action, the Read aloud action, and the walking directions in the warehouse:

Vignettes

Below are three vignettes of what the result would look like to a picker:

Inforum

Here are some photos at Inforum:

Holding my Augmented Reality demo:

Playing around with picking lists in virtual reality (Google Cardboard, Photo Spheres, and SketchFab):

Playing around with picking lists in Android Wear (Moto 360):

That’s it! If you liked this, please give it a thumbs up, leave a comment, subscribe to this blog, share it around you, and come help me write the next blog post. I need you. Thank you!